Machine Learning Algorithm

Machine learning (ML) is a powerful branch of artificial intelligence (AI) that enables computers to learn from data and make predictions or decisions without being explicitly programmed.

This is the first article in the series. We will start with the most basic one. For beginners, the vast number of algorithms can be daunting. One of the simplest and most foundational algorithms in Machine Learning is Linear Regression.

In this guide, we’ll delve deep into Linear Regression, explaining it step by step with practical examples to make the concept crystal clear. Before diving into Linear Regression, it’s essential to understand what a machine learning algorithm is.

What is a Machine Learning Algorithm?

Simply put, a Machine Learning Algorithm is a set of mathematical instructions or rules that guide a computer to find patterns in data and make predictions based on those patterns. These algorithms can be classified into several categories, such as supervised learning, unsupervised learning, and reinforcement learning. Linear Regression falls under the supervised learning category, where the model learns from labelled data.

What is Supervised Learning?

Supervised learning is a method in machine learning where we teach a computer to make predictions by showing it examples with known answers. We use labelled data, which consists of input values paired with their correct outputs, to train the computer.

For instance, if we want to predict house prices, we might provide the computer with labelled data that includes features of different houses (like size and location) aund their actual sale prices. The computer learns to identify patterns from this data to make its own predictions. After training, the computer is tested with new examples to see how well it performs.

One common technique in supervised learning is linear regression, which helps us predict numerical values based on other variables.

Understanding Linear Regression

Linear Regression is one of the simplest and most widely used algorithms in machine learning. It’s primarily used for predictive modelling when the relationship between the input (independent variable) and output (dependent variable) is linear.

In other words, Linear Regression tries to fit a straight line (also known as a regression line) through the data points in such a way that the difference between the predicted values and the actual values is minimized.

For Example: you run a bicycle rental shop in a city and want to predict the number of bicycles that will be rented out each day based on weather conditions. You have a dataset with information on past rental days, including features like daily temperature, humidity, and the number of bicycles rented. This dataset is your labelled data, where the weather conditions are the inputs (independent variables), and the number of bicycles rented is the output (dependent variable).

Key Concepts:

- Dependent Variable (Y): The number of bicycles rented each day. This is the outcome you’re trying to predict.

- Independent Variable (X): The weather conditions, such as daily temperature or humidity, which are used to make the prediction.

- Slope (m): The rate at which the number of bicycles rented changes with respect to changes in weather conditions. For example, if the temperature increases, the slope indicates how much the number of rentals is expected to increase.

- Intercept (b): The number of bicycles rented when the weather conditions are at their baseline level (e.g., zero degrees or 0% humidity). This is the starting point of the regression line when the independent variable is zero.

- Regression Line: The straight line that best fits the data points in a graph where the x-axis represents the weather conditions and the y-axis represents the number of bicycles rented. This line shows the predicted relationship between the weather conditions and the number of rentals.

The formula for a simple Linear Regression is:

Y = mX + bPractical Step-by-Step Example: Linear Regression in Action

How Linear Regression can be applied in a real-world scenario: predicting bicycle rentals based on weather conditions.

Step 1: Collect Data

First, gather your data. In this example, we have a dataset that includes daily weather conditions (temperature) and the number of bicycles rented each day.

| Temperature (°C) | Rentals |

| 15 | 50 |

| 20 | 80 |

| 25 | 120 |

| 30 | 150 |

| 35 | 200 |

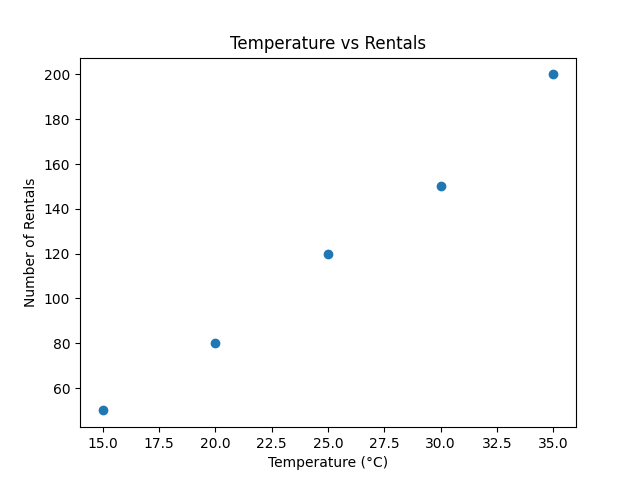

Step 2: Visualise Data

Visualizing the data helps in understanding the relationship between temperature and bicycle rentals. You can create a scatter plot with temperature on the x-axis and rentals on the y-axis.

import matplotlib.pyplot as plt

# Data

temperatures = [15, 20, 25, 30, 35]

rentals = [50, 80, 120, 150, 200]

# Plot

plt.scatter(temperatures, rentals)

plt.xlabel('Temperature (°C)')

plt.ylabel('Number of Rentals')

plt.title('Temperature vs Rentals')

plt.show()

This plot shows a clear upward trend, indicating that as the temperature increases, so do the bicycle rentals.

Step 3: Apply the Linear Regression Algorithm

Next, we are using Linear Regression to find the best-fitting line through the data points.

Using Python’s scikit-learn library:

from sklearn.linear_model import LinearRegression

import numpy as np

# Data

temperatures = np.array([15, 20, 25, 30, 35]).reshape(-1, 1)

rentals = np.array([50, 80, 120, 150, 200])

# Create model

model = LinearRegression()

model.fit(temperatures, rentals)

- Reshape Data: The

reshape(-1, 1)function converts the 1D array of temperatures into a 2D array becausescikit-learnexpects the input data to be in this format. - Model Creation: We create an instance of the

LinearRegressionclass and fit (train) it using the temperature and rental data.

Once the model is trained, we can find the slope (m) and intercept (b) of the regression line.

# Find slope (m) and intercept (b)

slope = model.coef_[0] # Access the slope (coefficient) of the regression line

intercept = model.intercept_ # Access the intercept of the regression line

print(f"Slope (m): {slope}")

print(f"Intercept (b): {intercept}")- Slope (m): The slope indicates how much the number of rentals increases for each degree increase in temperature.

- Intercept (b): The intercept represents the predicted number of rentals when the temperature is 0°C.

Slope (m): 7.4

Intercept (b): -65.0

The output of this Linear Regression model shows that:

- Slope (m): 7.4 – This indicates that for each 1°C increase in temperature, the number of bicycle rentals increases by 7.4 on average.

- Intercept (b): -65.0 – This means that if the temperature were 0°C (which is an extrapolation outside the data range), the model predicts -65 rentals, which doesn’t make practical sense in a real-world context but mathematically fits the line.

These values form the regression equation:

Rentals = 7.4×Temperature−65

This equation can now predict the number of bicycle rentals at different temperatures.

Now that we have the model, we can make predictions. For example, let’s predict the number of bicycle rentals at 22°C.

# Predict the number of rentals for a 22°C temperature

predicted_rentals = model.predict([[22]]) # Predict using the trained model

print(f"Predicted number of rentals at 22°C: {predicted_rentals[0]}")Finally, it’s important to evaluate how well the model performs. One common evaluation metric is Mean Squared Error (MSE).

from sklearn.metrics import mean_squared_error

# Predictions

predicted_rentals = model.predict(temperatures) # Predict rentals for the original data

# Calculate MSE

mse = mean_squared_error(rentals, predicted_rentals) # Compute the MSE

print(f"Mean Squared Error: {mse}")Mean Squared Error (MSE): This metric measures the average squared difference between the actual and predicted values. A lower MSE indicates better model performance.

Mean Squared Error: 22.0

An MSE of 22.0 suggests that the model’s predictions are reasonably accurate, but some discrepancy exists between predicted and actual values.

You can improve the model, such as adding more data, using different features, or trying more complex models.

Conclusion:

In this tutorial, we explored how Linear Regression can be used to predict the number of bicycle rentals based on temperature. You learned how to visualize data, create and train a model, make predictions, and evaluate the model’s performance.

This guide is a great starting point for anyone looking to understand machine learning basics, especially Linear Regression. If you have any questions or need further clarification, feel free to ask!

FAQs

Why Linear Regression?

Linear Regression is an excellent starting point for beginners because:

Simplicity: It’s easy to understand and implement.

Interpretable: The results are easy to interpret, making them ideal for explaining basic machine learning algorithm concepts.

Foundational: Many complex algorithms build on the concepts introduced in Linear Regression.

What is a machine learning algorithm in simple terms?

A machine learning algorithm is a set of instructions that helps a computer learn from data and make decisions. It’s like a recipe that guides the computer to predict outcomes.

How does Linear Regression work in machine learning?

Linear Regression works by finding a straight line that best represents the relationship between two variables, like house size and price, and uses this line to make predictions.

What are the key terms to know in Linear Regression?

Key terms include dependent and independent variables, slope, intercept, and the regression line, all of which help in making accurate predictions.

How to improve the linear regression model

To improve your linear regression model or provide more insights into its performance, you could explore further

1. Exploring Additional Features

- Add More Features: If you have more data, consider including additional variables (features) that might influence bicycle rentals, such as:

- Humidity: Higher humidity may reduce rentals.

- Day of the Week: Weekends may see higher rentals.

- Precipitation: Rainy days may result in fewer rentals.

By including more features, the model might capture more variability and improve prediction accuracy.

2. Using R-squared (Coefficient of Determination)

- R-squared (R²): This metric indicates how well the independent variable(s) explain the variability of the dependent variable. R² values range from 0 to 1, with values closer to 1 indicating that the model explains most of the variance in the target variable.

r_squared = model.score(temperatures, rentals)

print(f"R-squared: {r_squared}")3. Cross-Validation

K-Fold Cross-Validation: This method splits the data into ‘k’ subsets, training the model on ‘k-1’ subsets and validating it on the remaining subset. This process repeats ‘k’ times, and the average error across all ‘k’ trials gives a more reliable estimate of model performance.

from sklearn.model_selection import cross_val_score

# Perform 5-Fold Cross-Validation

cv_scores = cross_val_score(model, temperatures, rentals, cv=5, scoring='neg_mean_squared_error')

print(f"Cross-Validation MSE: {abs(cv_scores.mean())}")

Cross-validation provides a more robust evaluation by reducing the impact of random data splits. The average MSE across folds gives a better sense of model performance.

Pingback: Mean Squared Error (MSE) in Machine Learning - Simple Explanation